Have you ever used ChatGPT, Claude, or other AI content writing tools and wondered: Why is it sometimes extremely accurate, but other times it 'hallucinates' and writes things that are totally off the rails?

The answer doesn't lie in the AI suddenly being "smart" or "stupid", but in how we set the "control knobs" that manage its output. The three most important "knobs" that determine the balance between accuracy and creativity in AI are Temperature, Top P, and Top K.

In this article, we will dive deep into these concepts in the simplest, easiest-to-understand way so you can fully master your AI.

Why adjust these parameters when using AI?

Large Language Models (LLMs) like GPT-4 don't actually "understand" text like humans do. Basically, they are extremely complex probability prediction machines.

When you provide a prompt, the AI's sole task is to guess what the next word should be.

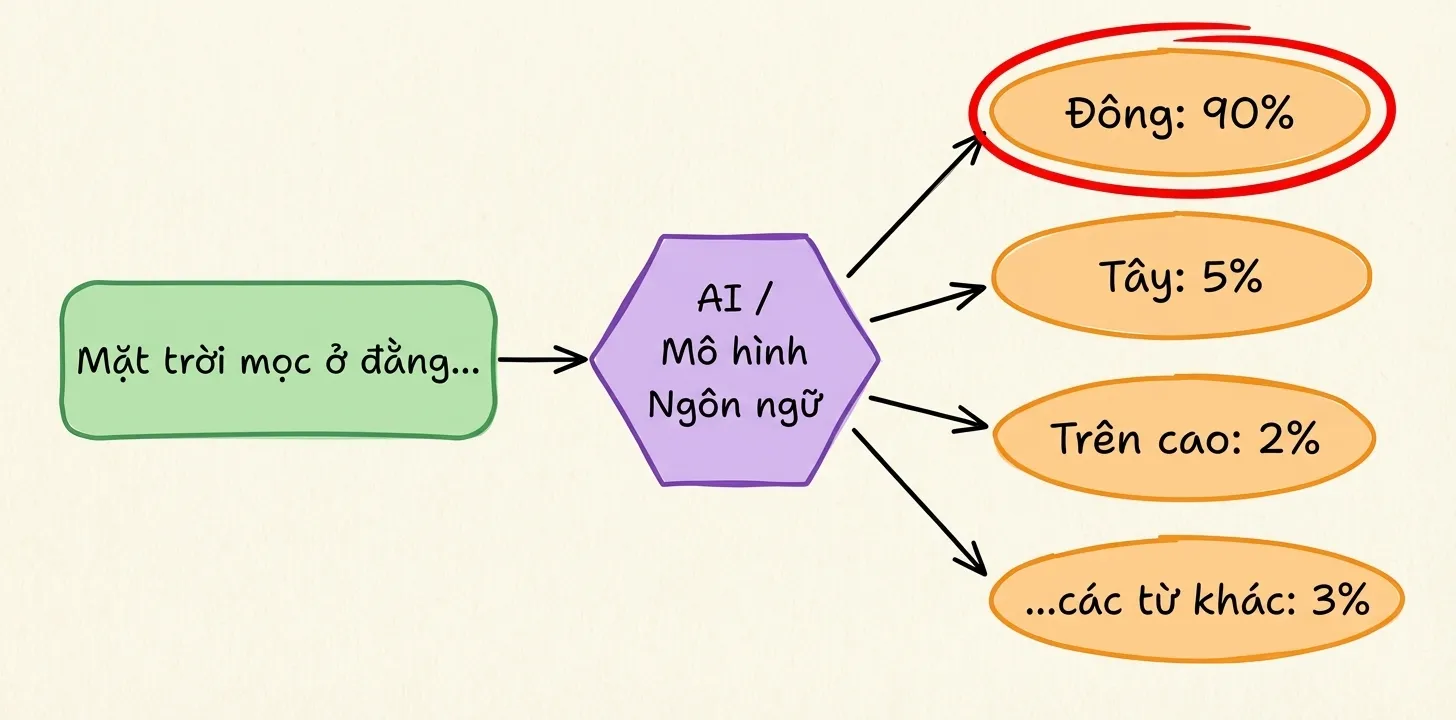

For example, if the prompt is "The sun rises in the...", the AI calculates the probability for the next word:

- East: 90%

- West: 5%

- Sky: 2%

- ...other words: 3%

If the AI always chooses the word with the highest probability (here, "East"), it will be very accurate but boring and repetitive. Conversely, if it occasionally chooses words with lower probability (like "West"), it becomes more "creative", but also more prone to being factually incorrect (AI hallucination).

The parameters Temperature, Top P, and Top K are the tools you use to tell the AI when to play it safe and when to take risks.
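The difference between "always pick the top word" and "occasionally pick a less likely word" can be sketched in a few lines of Python. This is a toy illustration, not how any particular model is implemented: the function names `pick_greedy` and `pick_sampled` are made up for this example, and the "..." entry simply stands in for all other words.

```python
import random

# Next-word probabilities from the example above ("..." stands in for all other words).
probs = {"East": 0.90, "West": 0.05, "Sky": 0.02, "...": 0.03}

def pick_greedy(probs):
    """Always return the single most likely word."""
    return max(probs, key=probs.get)

def pick_sampled(probs, rng):
    """Draw one word at random, weighted by its probability."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]

rng = random.Random(0)  # fixed seed so repeated runs give the same draws
greedy_picks = [pick_greedy(probs) for _ in range(5)]
sampled_picks = [pick_sampled(probs, rng) for _ in range(1000)]

print(greedy_picks)                        # the same word every time
print(sampled_picks.count("East") / 1000)  # close to 0.90, but not exactly 1.0
```

Greedy picking never leaves "East", while weighted sampling lands on "West" or "Sky" every so often. The three parameters below all adjust how that weighted draw behaves.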

What is Temperature?

Temperature is the most common parameter for controlling the randomness of AI output. Imagine it as a slider that adjusts the "craziness" or "creativity" of the model.

Temperature values usually range from 0 to 1 (some models allow up to 2).
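For readers who like formulas, one standard way to write what temperature does is the temperature-scaled softmax. The symbols here are assumptions introduced for illustration and do not appear elsewhere in the article: $z_i$ is the model's raw score for word $i$, $T$ is the temperature, and $p_i$ is the unscaled probability.

```latex
p_i(T) = \frac{\exp(z_i / T)}{\sum_j \exp(z_j / T)}
\qquad\text{equivalently}\qquad
p_i(T) \propto p_i^{\,1/T}
```

With $T = 1$ the probabilities are left as they are. As $T$ shrinks toward 0, the largest probability takes over almost completely, and as $T$ grows above 1 the probabilities move closer together.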

Low Temperature (Near 0)

Mechanism: When the temperature is low, the AI becomes very conservative. It makes high-probability words even more dominant, and low-probability words are almost eliminated. The AI will almost always choose the most likely word.

Result: The output is very consistent, accurate, factual, and less creative. Answers are usually concise and straight to the point.

When to use:

- When you need factual, accurate answers (e.g., looking up information, solving math problems).

- When writing code (programming requires absolute precision).

- When you need consistency (asking the same question multiple times yields the same, or nearly the same, answer).

Simple Example:

Ask AI: Quick answer: What is the capital of Vietnam?

Temperature = 0.0

→ AI answers: The capital of Vietnam is Hanoi.

Ask 10 more times → still Hanoi, no beating around the bush.

High Temperature (Near 1 or higher)

Mechanism: When the temperature is high, the gap between the most likely word and other words narrows. Rare words now have a higher chance of being chosen.

Result: The output is more diverse, creative, and surprising, but also more prone to rambling and has a higher risk of hallucination (fabricating information).

When to use:

- Creative writing (poems, stories, scripts).

- Brainstorming new ideas.

- When you want the AI to "talk more" and be more expressive.

Simple Example:

Same question: Quick answer: What is the capital of Vietnam?

Temperature = 2.0

→ AI might answer: The capital of Vietnam currently is Hanoi. Hanoi is the cultural, economic, educational, and political center of the entire country.

Ask again → Hanoi is the capital of the Socialist Republic of Vietnam. It is a municipality under the central government and one of two special-class cities in Vietnam. Hanoi serves as the political, cultural, and educational hub, and is one of the two key economic centers of Vietnam.

The information is still correct, but the phrasing is completely different.
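The low-temperature and high-temperature mechanisms described above can be checked numerically. The sketch below reuses the "The sun rises in the..." probabilities and rescales them by raising each probability to the power 1/T, then renormalizing, which is a common way to apply temperature. The function name `apply_temperature` is hypothetical, and treating "..." as a single word is a simplification.

```python
import math

def apply_temperature(probs, temperature):
    """Rescale a probability distribution with a temperature greater than 0."""
    # Dividing log-probabilities by T is the same as raising each p to the power 1/T.
    scaled = {w: math.exp(math.log(p) / temperature) for w, p in probs.items()}
    total = sum(scaled.values())
    # Renormalize so the rescaled values sum to 1 again.
    return {w: s / total for w, s in scaled.items()}

probs = {"East": 0.90, "West": 0.05, "Sky": 0.02, "...": 0.03}

for t in (0.2, 1.0, 2.0):
    rescaled = apply_temperature(probs, t)
    print(t, {w: round(p, 3) for w, p in rescaled.items()})
```

At T = 0.2 "East" ends up with practically all of the probability, at T = 1.0 nothing changes, and at T = 2.0 the other words gain a noticeably larger share. A temperature of exactly 0 cannot go through this formula (it would divide by zero), so it is commonly handled as a special case meaning "always pick the most likely word".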

What is Top P?

If Temperature determines "how risky the AI is allowed to be," then Top P determines "the range in which the AI is allowed to take risks."

Top P (also called nucleus sampling) does not reshape the relative probabilities of words. It only selects a group of words that are "good enough", and then the AI must choose from within that group.

How does Top P work?

Top-p selects the next token based on the smallest group of tokens whose cumulative probability is at least p.

Simple Idea

- The model predicts probabilities for all possible next tokens.

- Sorts tokens by probability in descending order.

- Takes from the top down until the cumulative probability >= p → this is called the "nucleus".

- Randomly selects only from within that nucleus group (ignoring everything outside).

Simple Example

Suppose the model is writing the sentence: Today the weather is...

And the probabilities for the next token are:

- "beautiful" : 0.40

- "rainy" : 0.25

- "cold" : 0.15

- "hot" : 0.10

- "bad" : 0.05

- "harsh" : 0.03

- (others) : 0.02

If Top P = 0.80

Cumulative sum from largest to smallest:

- beautiful: 0.40 (total 0.40)

- rainy: 0.25 (total 0.65)

- cold: 0.15 (total 0.80) → reached 0.80

→ Nucleus = {beautiful, rainy, cold}

The model will randomly choose only from these 3 words, based on their probability ratios.

If Top P = 0.95

Cumulative sum:

- beautiful: 0.40 (total 0.40)

- rainy: 0.25 (total 0.65)

- cold: 0.15 (total 0.80)

- hot: 0.10 (total 0.90)

- bad: 0.05 (total 0.95) → reached 0.95

→ Nucleus = {beautiful, rainy, cold, hot, bad}

With more choices available, the sentence can be more diverse and sometimes more unusual.
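The Top P walkthrough above can be reproduced with a short function. This is a minimal sketch under stated assumptions: `top_p_filter` is a name made up for this example, "(others)" is treated as a single token, and a tiny tolerance `eps` is added only to avoid floating-point rounding surprises when the running total lands exactly on p.

```python
def top_p_filter(probs, p, eps=1e-9):
    """Return the nucleus: the smallest top-ranked set whose total probability reaches p."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    nucleus = {}
    cumulative = 0.0
    for token, prob in ranked:
        nucleus[token] = prob
        cumulative += prob
        # Stop as soon as the running total reaches p (eps absorbs rounding error).
        if cumulative >= p - eps:
            break
    # Renormalize so the kept probabilities sum to 1 before sampling from them.
    return {token: prob / cumulative for token, prob in nucleus.items()}

weather = {"beautiful": 0.40, "rainy": 0.25, "cold": 0.15, "hot": 0.10,
           "bad": 0.05, "harsh": 0.03, "(others)": 0.02}

print(list(top_p_filter(weather, 0.80)))  # ['beautiful', 'rainy', 'cold']
print(list(top_p_filter(weather, 0.95)))  # ['beautiful', 'rainy', 'cold', 'hot', 'bad']
```

The renormalization step is what "based on their probability ratios" means in practice: inside the nucleus, "beautiful" is still the most likely pick, just out of a smaller pool.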

Top P Summary

- Low Top P (e.g., 0.3–0.5): few choices → stable, less rambling.

- High Top P (e.g., 0.8–0.95): many choices → more creative, but can be erratic.

What is Top K?

Top-k selects the next token in three steps:

- The model predicts probabilities for all tokens.

- It keeps only the K tokens with the highest probabilities.

- It randomly selects among those K tokens (based on their renormalized probabilities).

Top-k = only allow choosing from the top K most popular options.

Simple Example

Suppose the model is writing: Today the weather is...

Next token probabilities:

- "beautiful" : 0.40

- "rainy" : 0.25

- "cold" : 0.15

- "hot" : 0.10

- "bad" : 0.05

- "harsh" : 0.03

- (others) : 0.02

If Top K = 3

Keep the 3 highest probability tokens:

- beautiful: 0.40

- rainy: 0.25

- cold: 0.15

→ Model can only choose from {beautiful, rainy, cold}

Tokens like hot, bad, harsh are completely eliminated.

If Top K = 5

Keep:

- beautiful: 0.40

- rainy: 0.25

- cold: 0.15

- hot: 0.10

- bad: 0.05

→ More choices → more diverse sentence, but still within the "popular zone".
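The Top K example above is even simpler to sketch in code than Top P: sort, cut off after K entries, and renormalize. The function name `top_k_filter` is made up for this illustration, and "(others)" is again treated as a single token.

```python
def top_k_filter(probs, k):
    """Keep only the k most likely tokens and renormalize their probabilities."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept = ranked[:k]  # everything after position k is dropped entirely
    total = sum(prob for _, prob in kept)
    return {token: prob / total for token, prob in kept}

weather = {"beautiful": 0.40, "rainy": 0.25, "cold": 0.15, "hot": 0.10,
           "bad": 0.05, "harsh": 0.03, "(others)": 0.02}

print(list(top_k_filter(weather, 3)))  # ['beautiful', 'rainy', 'cold']
print(list(top_k_filter(weather, 5)))  # ['beautiful', 'rainy', 'cold', 'hot', 'bad']
print({t: round(p, 4) for t, p in top_k_filter(weather, 3).items()})
```

After the cut, the three surviving probabilities (0.40, 0.25, 0.15) are rescaled so they sum to 1, which is the "normalized probabilities" step in the list above.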

How is Top-k different from Top-p?

- Top K: Always keeps exactly K tokens, regardless of how "concentrated" or "scattered" the probability distribution is.

- Top P: The number of retained tokens changes dynamically so that the total probability >= p.
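To make this difference concrete, the sketch below counts how many tokens each method keeps on two made-up distributions: one where a single token dominates and one where all tokens are about equally likely. Both distributions and the helper names `top_k_count` and `top_p_count` are hypothetical and exist only for this illustration.

```python
def top_k_count(probs, k):
    """Top K keeps k tokens no matter what the distribution looks like."""
    return min(k, len(probs))

def top_p_count(probs, p, eps=1e-9):
    """Top P keeps as many top-ranked tokens as needed to reach total probability p."""
    cumulative = 0.0
    for count, prob in enumerate(sorted(probs, reverse=True), start=1):
        cumulative += prob
        if cumulative >= p - eps:  # eps absorbs floating-point rounding
            return count
    return len(probs)

peaked = [0.90, 0.05, 0.02, 0.02, 0.01]  # hypothetical: one token dominates
flat = [0.22, 0.21, 0.20, 0.19, 0.18]    # hypothetical: all tokens are similar

print(top_k_count(peaked, 3), top_k_count(flat, 3))      # 3 3
print(top_p_count(peaked, 0.8), top_p_count(flat, 0.8))  # 1 4
```

With K = 3 the model always chooses among three tokens, even when one of them already holds 90% of the probability. With p = 0.8 the pool shrinks to a single token when the model is confident and widens to four when it is not.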

Comparison: Temperature – Top P – Top K

| Parameter | Quick Understanding | Main Influence | What if Low? | What if High? |
|---|---|---|---|---|
| Temperature | AI's Riskiness | Creativity / Randomness Level | Rigid, safe, very logical | Flighty, creative, prone to rambling |
| Top P | Allowed vocabulary range | Flexibility in word choice | Strict, little deviation | More natural, diverse |
| Top K | Max words AI can choose from | Width of choice at each step | Few choices, very rigid sentences | Many choices, easy creativity |

In Summary

- Temperature: Is the AI allowed to take risks?

- Top P: What % of the most logical words can the AI use?

- Top K: How many words can the AI look at to choose from?

Combined Example of Temperature, Top P, Top K

Sentence: This weekend I want to...

Probabilities:

- play (0.30)

- travel (0.25)

- rest (0.20)

- explore (0.12)

- escape debt (0.08)

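- Temperature
  - T = 0.3 → leans heavily toward the top choice, play
  - T = 1.0 → samples from the probabilities as listed, so travel and rest come up often alongside play
  - T = 1.5 → flatter distribution, so a rare option like escape debt becomes noticeably more likely
- Top-p
  - p = 0.7 → play, travel, rest
  - p = 0.9 → adds: explore, escape debt
- Top-k
  - k = 2 → only chooses: play, travel
  - k = 4 → adds: rest, explore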
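In practice the three settings are usually applied together. The sketch below chains them in one order that is common in open-source samplers (temperature first, then Top K, then Top P), but that order is an assumption here and individual tools may differ. The function `sample_next` and its helper are hypothetical names, and a "(others)" entry of 0.05 is added so the listed probabilities sum to 1.

```python
import random

def _normalize(pairs):
    """Rescale (token, weight) pairs so the weights sum to 1."""
    total = sum(weight for _, weight in pairs)
    return [(token, weight / total) for token, weight in pairs]

def sample_next(probs, temperature=1.0, top_k=None, top_p=1.0, rng=random):
    """Pick one token after applying temperature, then Top K, then Top P."""
    # Step 1: temperature reshapes the whole distribution (must be greater than 0).
    pairs = _normalize([(t, p ** (1.0 / temperature)) for t, p in probs.items()])
    pairs.sort(key=lambda item: item[1], reverse=True)
    # Step 2: Top K keeps a fixed number of candidates.
    if top_k is not None:
        pairs = _normalize(pairs[:top_k])
    # Step 3: Top P keeps the smallest prefix whose total probability reaches top_p.
    kept, cumulative = [], 0.0
    for token, prob in pairs:
        kept.append((token, prob))
        cumulative += prob
        if cumulative >= top_p - 1e-9:  # small tolerance for rounding error
            break
    tokens, weights = zip(*kept)
    # Step 4: weighted random draw among the survivors.
    return rng.choices(tokens, weights=weights, k=1)[0]

weekend = {"play": 0.30, "travel": 0.25, "rest": 0.20,
           "explore": 0.12, "escape debt": 0.08, "(others)": 0.05}

rng = random.Random(42)  # fixed seed so repeated runs give the same draws
safe = {sample_next(weekend, temperature=0.3, top_k=2, top_p=0.9, rng=rng)
        for _ in range(200)}
loose = {sample_next(weekend, temperature=1.5, rng=rng) for _ in range(200)}
print(sorted(safe))
print(sorted(loose))
```

With the conservative settings, only "play" and "travel" can ever come out, because Top K = 2 removes everything else before the draw. With a high temperature and no filtering, the rarer options have a real chance of appearing.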

Quick Presets by Purpose

| Purpose | Temperature | Top P | Top K |
|---|---|---|---|
| Code / Technical | 0.1 – 0.3 | 0.8 | 20 |
| Blog / SEO | 0.5 – 0.7 | 0.9 | 40 |
| Creative / Ideas | 0.9 – 1.2 | 1.0 | 50+ |

Conclusion

AI doesn't become "hallucinatory" or "worse" at random. What determines the quality of the answer is largely how you adjust Temperature, Top P, and Top K. Depending on your settings, the AI will prioritize creativity and might ramble, or it will become accurate, stable, and on-point. Understanding these three parameters means you are no longer relying on luck, but actively controlling how the AI generates its answers.